Can artists really stop AI from stealing their work?

Misshapen eyes and hands with too many fingers once made AI-generated art easy to spot. Now, as the technology advances, it’s becoming harder to tell human work from machine-made creations.

With some fearing that AI will replace human creatives, AI-generated art has plenty of detractors. “Algorithm aversion,” the bias against AI-created work, seems only to be growing, and just 20% of U.S. adults think AI will have a positive impact on arts and entertainment.

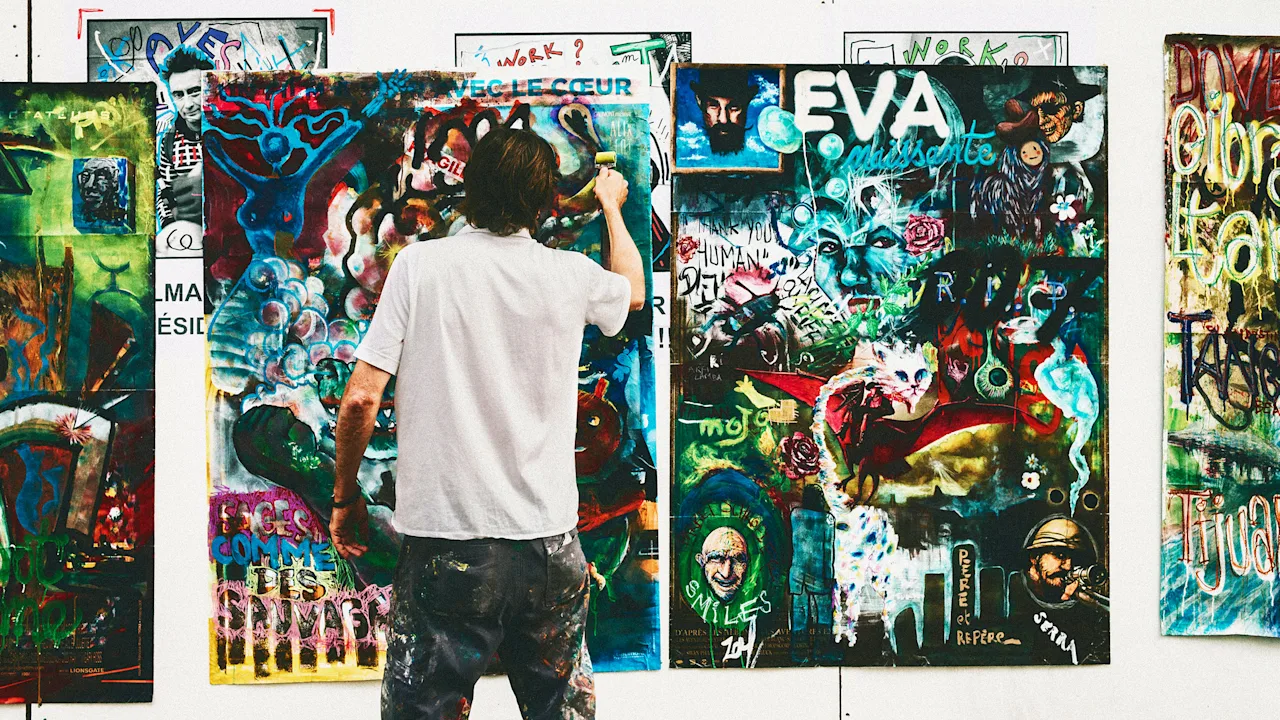

Artists are among the most vocal opponents, not only because AI is already cutting into their income as more image needs are met by machines, but also because the models may have been trained on their own work.

“This is a very critical topic,” says Philip Rieger, a postdoctoral researcher at the Technical University of Darmstadt. AI can easily imitate and manipulate images, violating artists’ copyrights, he adds.

In response, artists have filed lawsuits alleging that AI companies violated intellectual property laws by training on copyrighted works. Others are turning to digital tools to shield their art. But experts say artists’ work remains more vulnerable than many of them may realize.

How does AI violate artists’ copyrights?

Popular generators like Midjourney and OpenAI’s DALL-E are trained on millions of artworks, from Renaissance classics to modern digital pieces. These massive training sets allow them to mimic human styles with uncanny accuracy, making it harder than ever to tell the difference. A 2023 Yale University survey found undergraduates could identify whether art was AI-made just 54% of the time, barely better than a coin flip.

AI companies defend training on copyrighted art under the banner of “fair use,” a doctrine that allows the use of copyrighted work without the owner’s permission in certain circumstances. Courts weigh factors such as whether the use is commercial and whether it harms the market for, or value of, the original work.

But experts say that claim doesn’t hold up.

“These models are training on everyone’s work without their authorization,” says Kevin Madigan, senior vice president of policy and government affairs at the Copyright Alliance, an advocacy group for copyright-holders. “They have the capability to supplant the need for human-created work in the marketplace, which is one of the reasons we argue that it should not be considered fair use to use those things.”

Some AI companies, such as OpenAI, also argue that artists can opt out of having their work included in training datasets. Copyright advocates counter that this is not enough, and that opting out is meaningless if training has already happened. “Copyright is an opt-in regime,” Madigan says. “It’s sort of like putting the toothpaste back in the tube.”

Arguments over how AI should be allowed to use copyrighted works are still being hashed out in courts across the country and around the world, but none of these cases has settled the debate for artists, who have had few legal successes so far.

What tools are artists using to protect their work?

Since removing artwork from training sets is nearly impossible, some artists are trying to stop AI from accessing it in the first place.

Digital tools, like those developed by the University of Chicago’s Glaze Project, work to “poison” images by modifying the pixels that make up digital artworks in a way that is imperceptible to humans but enough to confuse AI models.

“It’s pretty interesting to be looking at,” says Hanna Foerster, a computer science PhD student at the University of Cambridge who has studied the security of these models. “You can optimize these pixels inside a picture although they seem almost the same to the human eye.”

One of the Glaze Project’s tools, Nightshade, works by making AI models see something different from what the artist rendered: a handbag where there was a cow, for example. Glaze, another of the project’s tools, alters the perceived style instead, making a model see a Jackson Pollock-esque painting where the artist had created a charcoal portrait.
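The core idea Foerster describes, small per-pixel changes chosen to push a model’s reading of an image somewhere else, can be sketched in a few lines. This is a toy, not the Glaze Project’s actual method: it assumes a made-up linear “cow vs. handbag” classifier over a four-pixel image (`WEIGHTS`, `BIAS`, `score`, `label`, and `perturb` are all hypothetical) and applies a single fast-gradient-sign step, a textbook adversarial perturbation that moves every pixel by at most `epsilon`.

```python
# Toy illustration only: a made-up linear "classifier" over a 4-pixel grayscale image.
# A score above 0 reads as "handbag"; otherwise the image reads as "cow".
WEIGHTS = [0.9, -0.4, 0.7, -0.8]  # hypothetical model weights
BIAS = -0.1


def score(pixels):
    """Linear score: weighted sum of pixel intensities plus a bias."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def label(pixels):
    return "handbag" if score(pixels) > 0 else "cow"


def sign(x):
    return (x > 0) - (x < 0)


def perturb(pixels, epsilon):
    """One fast-gradient-sign step toward "handbag".

    For a linear score, the gradient with respect to the pixels is just WEIGHTS,
    so each pixel moves by epsilon in the direction of its weight's sign and is
    then clamped to the valid [0, 1] intensity range.
    """
    return [min(1.0, max(0.0, p + epsilon * sign(w))) for p, w in zip(pixels, WEIGHTS)]


clean = [0.40, 0.55, 0.45, 0.60]
poisoned = perturb(clean, epsilon=0.05)
print(label(clean), "->", label(poisoned))  # cow -> handbag
print(max(abs(a - b) for a, b in zip(clean, poisoned)))  # largest per-pixel change
```

Here a change of at most 0.05 per pixel, on a 0 to 1 intensity scale, is enough to flip the label. Tools aimed at real image models have to run a much more elaborate optimization over far more pixels, which is what lets the change stay hard for people to see.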

These poisons aim to protect artwork after it has already made its way into a training dataset. Other measures, such as a website’s robots.txt file, aim to keep work out of datasets in the first place: the file tells automated bots combing websites for content which parts of the site they aren’t allowed to crawl.
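How a crawler reads robots.txt can be sketched with Python’s standard-library parser, `urllib.robotparser`. The two files below are illustrative assumptions: one blanket rule for every bot, and one that names only AI-training crawlers. (`GPTBot` and `CCBot` are the user-agent tokens OpenAI and Common Crawl publish for their crawlers; check each operator’s current documentation.) The `allowed` helper and the URL are hypothetical placeholders.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt, user_agent, url):
    """Ask Python's standard robots.txt parser whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Illustrative file 1: a blanket rule that turns away every crawler, search engines included.
BLANKET = """\
User-agent: *
Disallow: /
"""

# Illustrative file 2: turns away only the named AI-training crawlers.
TARGETED = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /
"""

URL = "https://example.com/gallery/portrait.png"  # placeholder artwork URL

for name, rules in [("blanket", BLANKET), ("targeted", TARGETED)]:
    for agent in ["GPTBot", "Googlebot"]:
        print(f"{name:8} {agent:9} allowed={allowed(rules, agent, URL)}")
```

The parser only reports what the site owner has asked for; a well-behaved crawler checks it before fetching. Nothing in the file stops a scraper that never asks.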

Why aren’t these anti-AI tools enough?

While there are several ways to keep AI models from training on artwork, each comes with trade-offs and vulnerabilities, experts say. Robots.txt, for instance, can turn away bots scraping the web to build training sets, but a blanket block also turns away search engines, hurting artists’ visibility.

“There’s a few different issues with that,” Madigan says. “It’s not practical to say, ‘Don’t put your work online,’ because that’s basically people’s livelihoods, to have a presence online.”

The pixel-level “poisons” that distort images to confuse AI systems also have limits. LightShed, a new tool built by system security researchers, shows just how easily those poisons can be undone.

To build LightShed, the researchers studied how these protective programs alter images. They found consistent patterns in the way poisons were applied, and trained their own model to recognize them by feeding it both clean and poisoned versions of the same images.

“It looks different from image to image, obviously, but it’s still the same process that is applied,” says Foerster, who worked on LightShed during an internship at the Technical University of Darmstadt.

Once their model could reliably detect the poison, it simply stripped it away, recovering a close approximation of the original, unprotected image underneath.
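LightShed itself trains a neural network on clean and poisoned images. The sketch below swaps that for something far simpler, under a strong assumption: a hypothetical protection tool adds one fixed, faint pattern to every image. Under that assumption, averaging the differences over a few clean/protected pairs recovers the pattern, projecting a new image onto it flags protection, and subtracting it strips the poison. Every name and value here (`protect`, `learn_signature`, `poison_score`, `strip`, the pattern, the ramp “images”, the threshold) is illustrative. Real tools vary the perturbation from image to image, so real removal is much harder; the toy shows only why “the same process that is applied” leaves a learnable fingerprint.

```python
# Toy stand-in for the idea behind LightShed: a protection tool that applies the
# same kind of change to every image leaves a learnable fingerprint.
# Everything here (the pattern, the ramp "images", the threshold) is hypothetical.

SIZE = 8
# Hypothetical poison: a faint, high-frequency +/-0.02 pattern added to a row of pixels.
_SECRET_PATTERN = [0.02 if i % 2 == 0 else -0.02 for i in range(SIZE)]


def protect(image):
    """The 'protection tool': adds the same small pattern to each image."""
    return [p + d for p, d in zip(image, _SECRET_PATTERN)]


def ramp(start, step):
    """A smooth toy 'image': pixel values changing linearly across the row."""
    return [start + step * i for i in range(SIZE)]


def learn_signature(pairs):
    """Estimate the poison as the per-pixel mean of (protected - clean) over training pairs."""
    return [sum(p[i] - c[i] for c, p in pairs) / len(pairs) for i in range(SIZE)]


def high_pass(image):
    """Second difference: zero on smooth ramps, large on pixel-to-pixel flicker."""
    return [image[i] - (image[i - 1] + image[i + 1]) / 2 for i in range(1, SIZE - 1)]


def poison_score(image, signature):
    """Project the image's high-frequency content onto the learned signature.

    Roughly 1 for a protected ramp image, roughly 0 for a clean one.
    """
    h_img, h_sig = high_pass(image), high_pass(signature)
    return sum(a * b for a, b in zip(h_img, h_sig)) / sum(b * b for b in h_sig)


def strip(image, signature, threshold=0.5):
    """If the fingerprint is detected, subtract it to approximate the original image."""
    if poison_score(image, signature) > threshold:
        return [p - s for p, s in zip(image, signature)]
    return list(image)


# "Training": clean/protected pairs the attacker can produce with the tool themselves.
train_clean = [ramp(0.1, 0.05), ramp(0.3, 0.02), ramp(0.6, -0.04)]
signature = learn_signature([(c, protect(c)) for c in train_clean])

# A new protected image the attacker never saw in clean form.
original = ramp(0.2, 0.07)
recovered = strip(protect(original), signature)
print(max(abs(a - b) for a, b in zip(original, recovered)) < 1e-9)  # True
```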

The researchers, who presented their work earlier this month at the USENIX Security Symposium, stress that their goal isn’t to endanger artists or attack protective tools.

“Our work wasn’t intended as a real attack, neither against the tools nor against the artists,” Rieger says. “We just wanted to show the need for more robust detection tools and that, basically, people shouldn’t trust blindly to these tools.”

LightShed is only available for research, but the team hopes it will spark stronger safeguards against AI scraping and manipulation.

“Basically, we are playing nice and informing the people since the bad guys who are not playing as nice as we do wouldn’t inform them,” Rieger says, noting that the team alerted poison creators to the vulnerabilities they found. They also hope word of their findings reaches artists who may rethink how they protect their work.

The future of anti-AI protections

Researchers say that creating a foolproof defense against AI models may be nearly impossible, especially as image generation systems grow more advanced. To block tools like LightShed, image poisons would need to be far more random, but that would risk making the image unrecognizable to humans.

“We have thought about how to construct a kind of perturbation that would be a lot stronger than the ones that exist, but I don’t think we can guarantee at all that they won’t be broken a different way,” Foerster says.

Other approaches, such as cryptographic watermarking, could help artists detect when their work has been used in AI-generated content. But “it’s definitely a very difficult problem,” Foerster adds.

Beyond the technical fixes, some experts argue that the real solution lies in regulation. Madigan says protecting artists will require legislation and legal action to force AI companies to be transparent about their training practices.

In particular, Madigan is hopeful about bipartisan legislation introduced last month by Senators Josh Hawley (R-Mo.) and Richard Blumenthal (D-Conn.) that would hold tech companies accountable for training models on copyrighted materials.

“AI companies are robbing the American people blind while leaving artists, writers, and other creators with zero recourse,” Hawley said in a statement. “It’s time for Congress to give the American worker their day in court to protect their personal data and creative works.”

If passed, the bill could give artists new legal tools to sue tech companies for copyright infringement, whether individually or through class action lawsuits, and levy financial penalties against firms that misuse copyrighted material.

Even with such challenges, experts stress that artists shouldn’t lose hope. The landscape around AI is evolving quickly—and so are the efforts to defend against its risks.

“Staying on top of these issues, that helps empower the whole community of artists,” Madigan says.
