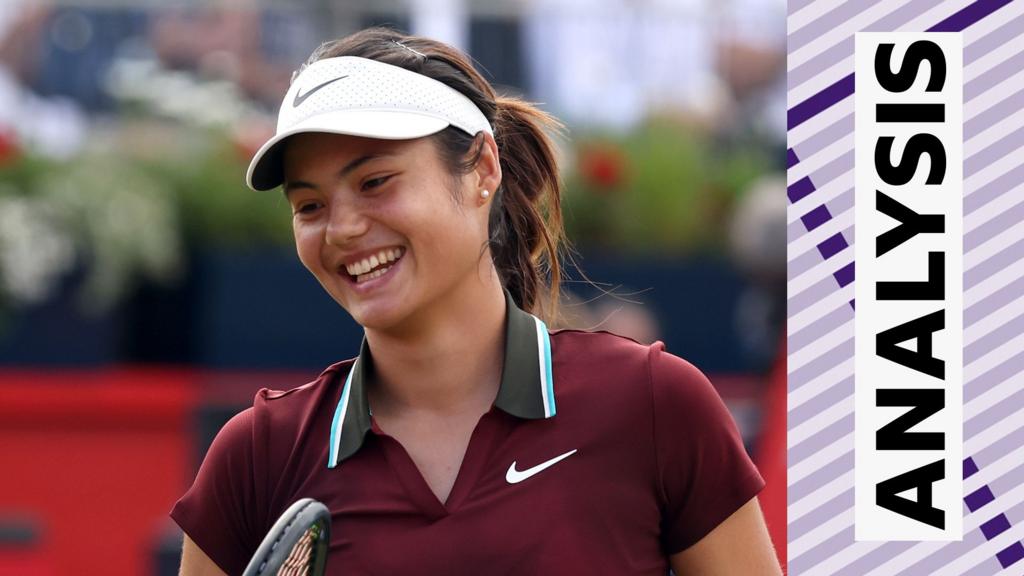

Why companies implementing agentic AI before putting proper governance in place will end up behind, not ahead of, the curve

Agentic AI is the buzzword of 2025. Although agentic AI is technically still an “emerging technology,” companies of all sizes seem to be rapidly developing and acquiring AI agents to stay ahead of the curve and their competition. Just last week, OpenAI launched a research preview of Codex, its cloud-based software engineering agent, which the company calls its “most capable AI coding agent yet.” The interest and excitement are understandable.

Transforming industries

From customer service to supply chain management to the legal profession, AI agents are set to transform industries across the board. They are already proving pervasive in both consumer and enterprise environments, bringing AI fully into the mainstream. Unlike chatbots and image generators, which provide answers only in response to prompts, AI agents execute multistep tasks on behalf of users. In 2025, these autonomous software programs will dramatically change how people interact with technology and how businesses operate.

This aligns with Forrester’s latest findings, which placed agentic AI at the top of its Top 10 Emerging Technologies for 2025, highlighting the power and potential of this trend. However, as the report also points out, the rewards come with significant risks and challenges. Let’s dive into these, as well as why companies must prioritize governance before development and implementation to stay ahead of, not behind, the curve and their competition.

A governance-first approach

In just three years, at least 15% of day-to-day work decisions will be made autonomously by AI agents, up from virtually 0% in 2024. This Gartner prediction, while promising, sits alongside another key statistic: 25% of enterprise breaches will be linked to AI agent abuse. As mentioned above, the rapid and widespread adoption of AI agents, while exciting, brings complex challenges such as shadow AI (AI tools and agents deployed without the knowledge or approval of IT and governance teams). That is why companies must take a governance-first approach.

So what is it about AI agents that makes them particularly challenging to control?

Short answer: their ability to operate autonomously. Long answer: this autonomy makes it difficult for organizations to answer five basic questions:

- Who owns which agent?

- Which department oversees it?

- What data does it have access to?

- What actions can it take?

- How can it be governed effectively?
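The first four questions map naturally onto an agent inventory. The sketch below is a minimal, hypothetical Python illustration: `AgentRecord`, `AgentRegistry`, and their field names are assumptions made for this example, not part of any specific governance product.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentRecord:
    """One inventory entry answering the visibility questions for a single agent."""

    agent_id: str
    owner: str  # who owns the agent (an accountable person)
    department: str  # which department oversees it
    data_scopes: set[str] = field(default_factory=set)  # what data it can access
    allowed_actions: set[str] = field(default_factory=set)  # what actions it can take


class AgentRegistry:
    """Minimal in-memory agent inventory (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        # Refuse duplicates so every agent ID maps to exactly one accountable owner.
        if record.agent_id in self._agents:
            raise ValueError(f"agent {record.agent_id!r} is already registered")
        self._agents[record.agent_id] = record

    def lookup(self, agent_id: str) -> Optional[AgentRecord]:
        return self._agents.get(agent_id)

    def agents_with_access_to(self, scope: str) -> list[str]:
        # Answers "which agents can touch this data?" during a review.
        return sorted(a.agent_id for a in self._agents.values() if scope in a.data_scopes)

    def unregistered(self, observed_ids: set[str]) -> set[str]:
        # Agents seen running in the environment but missing from the inventory.
        return observed_ids - set(self._agents)
```

Comparing the agents actually observed in your environment against the inventory, as `unregistered` does, is one simple way to surface shadow AI.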

A comprehensive approach

This is where unified governance can step in. With a comprehensive governance framework, companies can ensure that AI agents operate responsibly and align with organizational standards and policies. The alternative: without a governance framework, AI agents can mishandle sensitive data, violate compliance regulations, and make decisions misaligned with business objectives.

Consider a hypothetical example: you are the CEO of a major organization. Your company builds and introduces an AI-powered assistant to automate workflows and save you time. Now imagine that the assistant gains access to your confidential files. Without guidance or governance, it summarizes sensitive financial projections and closed-door board discussions and shares them with third-party vendors or unauthorized employees. This is a worst-case scenario, but it highlights the importance of a solid governance framework.
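To make the scenario concrete, here is a minimal Python sketch of a deny-by-default sharing check that an assistant could run before sending a summary anywhere. The three sensitivity levels, the `Recipient` type, and the rule that external recipients only ever receive public content are all assumptions for illustration, not a prescribed standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels; a higher value means more sensitive."""

    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class Recipient:
    name: str
    clearance: Sensitivity  # highest sensitivity this recipient may receive
    external: bool = False  # True for third-party vendors


def may_share(document_level: Sensitivity, recipient: Recipient) -> bool:
    """Return True only if the recipient is cleared for the document's level.

    Assumed rule: external recipients are capped at PUBLIC regardless of
    their stated clearance.
    """
    effective = Sensitivity.PUBLIC if recipient.external else recipient.clearance
    return document_level <= effective


def share(document: str, level: Sensitivity, recipient: Recipient) -> str:
    # Deny by default: the agent sends the content only if the policy allows it.
    if not may_share(level, recipient):
        raise PermissionError(f"blocked: {level.name} content to {recipient.name}")
    return f"sent {document!r} to {recipient.name}"
```

With this check in place, a summary of board discussions labeled `CONFIDENTIAL` could reach a cleared board member but would raise `PermissionError` for a vendor or an employee cleared only for internal material.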

Here’s a helpful governance checklist:

- Establish guidelines that clearly define acceptable use and assign accountability.

- Carry out regular reviews to help identify and mitigate potential risks and threats.

- Appoint the right stakeholders to foster transparency and build trust in how AI agents are used, both internally and externally.

Blurred lines

According to Sunil Soares, founder of YDC, agentic AI will drive the need for new governance approaches: as more applications include embedded AI and AI agents, the line between applications and AI use cases will become increasingly blurred. I couldn’t agree more.

Whether you develop AI agents internally or partner with a third-party vendor, this technology will unlock significant value. But the challenges are not one-size-fits-all, and they will not go away. And while the human element remains important, manual oversight alone is neither sufficient nor realistic at scale.

Therefore, when you build out your governance framework, make sure automated monitoring tools are in place to detect and correct policy violations, record decisions for greater transparency, and escalate complex cases that require additional oversight, such as review by a human in the loop. A centralized governance framework ensures accountability, risk assessment, and ethical compliance. Like everything else in life, you need to establish boundaries.
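The monitoring loop described above (block violations, record every decision, escalate edge cases) can be sketched in a few dozen lines of Python. `PolicyMonitor`, the per-agent allowlist, the action names, and the monetary escalation threshold are hypothetical choices for this example; a real deployment would plug into whatever policy engine and audit store the organization already uses.

```python
import json
import time
from dataclasses import asdict, dataclass
from enum import Enum


class Decision(str, Enum):
    ALLOW = "allow"
    DENY = "deny"
    ESCALATE = "escalate"


@dataclass
class Action:
    agent_id: str
    name: str  # hypothetical action names, e.g. "send_email", "issue_refund"
    amount: float = 0.0


@dataclass
class AuditEntry:
    timestamp: float
    agent_id: str
    action: str
    decision: str
    reason: str


class PolicyMonitor:
    """Hypothetical monitor: checks each action, logs the decision, escalates edge cases."""

    def __init__(self, allowed: dict[str, set[str]], escalation_threshold: float) -> None:
        self.allowed = allowed  # agent_id -> permitted action names
        self.escalation_threshold = escalation_threshold
        self.audit_log: list[AuditEntry] = []  # every decision, for transparency
        self.review_queue: list[Action] = []  # cases awaiting a human in the loop

    def evaluate(self, action: Action) -> Decision:
        if action.name not in self.allowed.get(action.agent_id, set()):
            # Violation: the action is blocked rather than executed.
            decision, reason = Decision.DENY, "action not permitted for this agent"
        elif action.amount > self.escalation_threshold:
            # Permitted action, but too consequential to run unattended.
            decision, reason = Decision.ESCALATE, "amount exceeds threshold"
            self.review_queue.append(action)
        else:
            decision, reason = Decision.ALLOW, "within policy"
        self.audit_log.append(
            AuditEntry(time.time(), action.agent_id, action.name, decision.value, reason)
        )
        return decision

    def export_log(self) -> str:
        # Serialize the decision record, e.g. for an external audit store.
        return json.dumps([asdict(entry) for entry in self.audit_log])
```

Keeping the audit log append-only and exportable is one way to provide the decision record a governance framework calls for; the review queue is where a human reviewer would pick up escalated cases.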

And don’t worry: implementing a governance framework first won’t slow innovation down. When you find the right balance between innovation and risk management, you stay ahead of the curve and the competition, leaving room for more cutting-edge AI agents and fewer headaches. For the best results, deploy a unified governance platform for data and AI; it will be key to managing AI agents and ensuring they don’t become the next shadow IT.
