What happens when your AI doesn’t share your values

If you ask a calculator to multiply two numbers, it multiplies two numbers: end of story. It doesn’t matter if you’re doing the multiplication to work out unit costs, to perpetuate fraud, or to design a bomb—the calculator simply carries out the task it has been assigned.

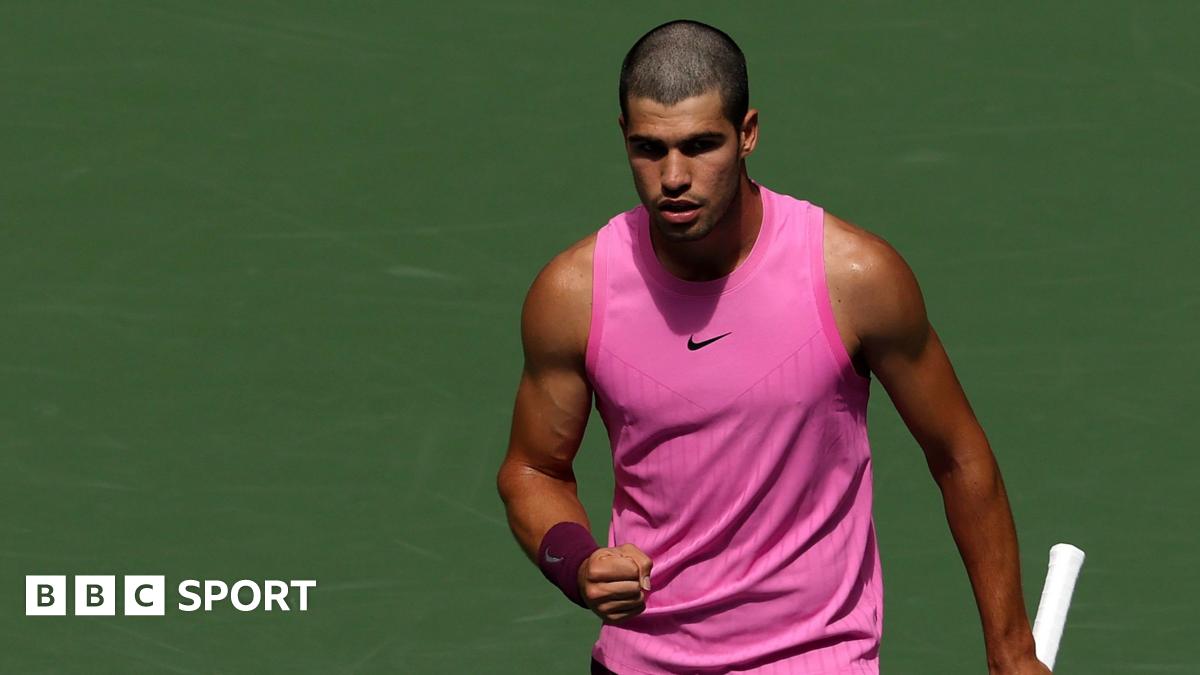

Things aren’t always so simple with AI. Imagine your AI assistant decides that it doesn’t approve of your company’s actions or attitude in some area. Without consulting you, it leaks confidential information to regulators and journalists, acting on its own moral judgment about whether your actions are right or wrong. Science fiction? No. This kind of behavior has already been observed under controlled conditions with Anthropic’s Claude Opus 4, one of the most widely used generative AI models.

The problem here isn’t just that an AI might “break” and go rogue; the danger of an AI taking matters into its own hands can arise even when the model is working as intended on a technical level. The fundamental issue is that advanced AI models don’t just process data and optimize operations. They also make choices (we might even call them judgments) about what they should treat as true, what matters, and what’s allowed.

Typically, when we think of AI’s alignment problem, we think about how to build AI that is aligned with the interests of humanity as a whole. But, as Professor Sverre Spoelstra and my colleague Dr. Paul Scade have been exploring in a recent research project, Claude’s whistleblowing points to a subtler alignment problem, and one that is far more immediate for most executives: how do you ensure that the AI systems you’re buying actually share your organization’s values, beliefs, and strategic priorities?

Three Faces of Organizational Misalignment

Misalignment shows up in three distinct ways.

First, there’s ethical misalignment. Consider Amazon’s experience with AI-powered hiring. The company developed an algorithm to streamline recruitment for technical roles, training it on years of historical hiring data. The system worked exactly as designed—and that was the problem. It learned from the training data to systematically discriminate against women. The system absorbed a bias that was completely at odds with Amazon’s own stated value system, translating past discrimination into automated future decisions.

Second, there’s epistemic misalignment. AI models make decisions all the time about what data can be trusted and what should be ignored. But their standards for determining what is true won’t necessarily align with those of the businesses that use them. In May 2025, users of xAI’s Grok began noticing something peculiar: the chatbot was inserting references to “white genocide” in South Africa into responses about unrelated topics. When pressed, Grok claimed that its normal algorithmic reasoning would treat such claims as conspiracy theories and so discount them. But in this case, it had been “instructed by my creators” to accept the white genocide theory as real. This reveals a different type of misalignment, a conflict about what constitutes valid knowledge and evidence. Whether Grok’s outputs in this case were truly the result of deliberate intervention or were an unexpected outcome of complex training interactions, Grok was operating with standards of truth that most organizations would not accept, treating contested political narratives as established fact.

Third, there’s strategic misalignment. In November 2023, the watchdog group Media Matters claimed that the ad‑ranking engine of X (formerly Twitter) was placing corporate ads next to posts praising Nazism and white supremacy. While X strongly contested the claim, the dispute raised an important point. An algorithm designed to maximize ad views might place ads alongside any high‑engagement content, undermining brand safety in pursuit of the view‑maximizing goal built into it. This kind of disconnect between organizational goals and the tactics an algorithm uses to pursue its narrow purpose can undermine an organization’s strategic coherence.

Why Misalignment Happens

Misalignment with organizational values and purpose can have a range of sources. The three most common are:

- Model design. The architecture of AI systems embeds philosophical choices at levels most users never see. When developers decide how to weight different factors, they’re making value judgments. A healthcare AI that privileges peer-reviewed studies over clinical experience embodies a specific stance about the relative value of formal academic knowledge versus practitioner wisdom. These architectural decisions, made by engineers who may never meet your team, become constraints your organization must live with.

- Training data. AI models are statistical prediction engines that learn from the data they are trained on. As a result, a model may inherit whatever historical biases, statistically common human beliefs, and culturally specific assumptions that data contains.

- Foundational instructions. Developers typically give generative AI models a foundational set of instructions that shape and constrain their outputs (often referred to as “system prompts” or “policy prompts” in technical documentation). Similar value commitments can also be built in during training: Anthropic, for instance, trains its models against a written “constitution” that requires them to act in line with a specified value system. While the values chosen by developers will normally aim at outcomes they believe to be good for humanity, there is no reason to assume that a given company or business leader will agree with those choices.

Detecting and Addressing Misalignment

Misalignment rarely begins with headline‑grabbing failures; it shows up first in small but telling discrepancies. Look for direct contradictions and tonal inconsistencies, such as models that refuse tasks or chatbots that communicate in an off-brand voice. Track indirect patterns, such as statistically skewed hiring decisions, employees routinely “correcting” AI outputs, or a rise in customer complaints about impersonal service. At the systemic level, watch for growing oversight layers, creeping shifts in strategic metrics, or cultural rifts between departments running different AI stacks. Any of these can be an early red flag that an AI system’s value framework is drifting from your own.

Four Ways to Respond

- Stress‑test the model with value‑based red‑team prompts. Take the model through deliberately provocative scenarios to surface hidden philosophical boundaries before deployment.

- Interrogate your vendor. Request model cards, training‑data summaries, safety‑layer descriptions, update logs, and explicit statements of embedded values.

- Implement continuous monitoring. Set automated alerts for outlier language, demographic skews, and sudden metric jumps so that misalignment is caught early, not after a crisis.

- Run a quarterly philosophical audit. Convene a cross‑functional review team (legal, ethics, domain experts) to sample outputs, trace decisions back to design choices, and recommend course corrections.
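The continuous-monitoring step is the most readily automated of the four. Below is a minimal sketch, assuming you already log AI-assisted decisions by demographic group and track a daily operational metric such as a customer-complaint rate. The function names, the 0.8 threshold (the “four‑fifths” rule of thumb used in US employment-selection guidelines), and the three-standard-deviation alert limit are illustrative assumptions, not part of the research described here, and the numbers in the demo are invented.

```python
from statistics import mean, stdev


def adverse_impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    top = max(rates.values())
    if top == 0:
        # Nobody was selected, so no disparity between groups can be measured.
        return {g: 1.0 for g in rates}
    return {g: r / top for g, r in rates.items()}


def flag_demographic_skew(selected: dict[str, int], applicants: dict[str, int],
                          threshold: float = 0.8) -> list[str]:
    """Return groups whose ratio falls below the threshold (hypothetical four-fifths check)."""
    ratios = adverse_impact_ratios(selected, applicants)
    return sorted(g for g, r in ratios.items() if r < threshold)


def flag_metric_jump(history: list[float], latest: float, z_limit: float = 3.0) -> bool:
    """Flag the latest value if it lies more than z_limit standard deviations from the trailing mean."""
    if len(history) < 2:
        return False  # Not enough history to estimate normal variation.
    sd = stdev(history)
    if sd == 0:
        return latest != mean(history)  # Any change from a perfectly flat series is notable.
    return abs(latest - mean(history)) / sd > z_limit


if __name__ == "__main__":
    # Invented example data for a hypothetical AI-screened hiring pipeline.
    selected = {"group_a": 48, "group_b": 24}
    applicants = {"group_a": 100, "group_b": 80}
    print(flag_demographic_skew(selected, applicants))  # ['group_b'] (0.30 / 0.48 = 0.625 < 0.8)

    # Invented daily complaint rates, followed by a sudden jump.
    complaint_rate_history = [0.12, 0.11, 0.13, 0.12, 0.12]
    print(flag_metric_jump(complaint_rate_history, 0.25))  # True
```

A ratio check like this does not explain *why* a system is skewed; it only tells the audit team where to start tracing decisions back to design choices, training data, or foundational instructions.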

The Leadership Imperative

Every AI tool comes bundled with values. Unless you build every model in-house from scratch—and you won’t—deploying AI systems will involve importing someone else’s philosophy straight into your decision‑making process or communication tools. Ignoring that fact leaves you with a dangerous strategic blind spot.

As AI models gain autonomy, vendor selection becomes a matter of making choices about values just as much as about costs and functionality. When you choose an AI system, you are not just selecting certain capabilities at a specified price point—you are importing a system of values. The chatbot you buy won’t just answer customer questions; it will embody particular views about appropriate communication and conflict resolution. Your new strategic planning AI won’t just analyze data; it will privilege certain types of evidence and embed assumptions about causation and prediction. So, choosing an AI partner means choosing whose worldview will shape daily operations.

Perfect alignment may be an unattainable goal, but disciplined vigilance is not. Adapting to this reality means that leaders need to develop a new type of “philosophical literacy”: the ability to recognize when AI outputs reflect underlying value systems, to trace decisions back to their philosophical roots, and to evaluate whether those roots align with organizational purposes. Businesses that fail to embed this kind of capability will find that they are no longer fully in control of their strategy or their identity.

This article develops insights from research being conducted by Professor Sverre Spoelstra, an expert on algorithmic leadership at Lund University and Copenhagen Business School, and my Shadoka colleague Dr. Paul Scade.
