AI embarrassed them at work. Don’t let this happen to you

Dearest reader,

I hope this article finds you in good health.

I also deeply desire that, if you use generative AI to boost your productivity at work, you, for all that is good and holy, review everything it produces, lest it hallucinate data or quotes or address your boss by the wrong name, and you fall on your face and embarrass yourself.

Sincerely,

Your unchecked AI results

AI is taking the workforce by storm and by stealth: the rules for how to use it are still being written, and employees are left to experiment. Many employees are under pressure to adopt AI. Some companies, such as Shopify and Duolingo, are requiring employees to use AI, while others are ratcheting up productivity expectations so high that some workers may be using it just to meet demands. This creates an environment ripe for mistakes: we’ve seen Grok spew hate speech on X, and more recently an AI agent deleted an entire database of executive contacts belonging to investor Jason Lemkin.

Funnily enough, no one wants to share their own AI-induced flub, but everyone has a story about someone else’s. These AI nightmares range from embarrassing to potentially fireable offenses, but together they reveal why there always needs to be a human in the loop.

The Email You Obviously Didn’t Write

Failing to review AI-generated content seems to be the most common mistake workers are making, and it’s producing errors big and small. On the small side, one worker in tech sales who asked to remain anonymous tells Fast Company her colleague used to ask ChatGPT to write “natural-sounding sales emails,” then contacted clients with Dickensian messages that began, “I hope this email finds you in good health.”

The Slackbot Gone Awry

Similarly, Clemens Rychlik, COO at marketing firm Hello Operator, says a colleague let ChatGPT draft Slack replies largely unchecked, and one of those replies addressed him as Clarence instead of Clemens. When Clemens replied in good fun, calling his colleague the wrong name too, “their reaction was, of course, guilt and shame—and the responses after that were definitely ‘human.’”

The Inappropriate Business Recommendation

On the larger side, some people are using AI to generate information for clients without checking the results, which compromises the quality of their work. Alex Smereczniak is the CEO of the startup Franzy, a marketplace for buying and selling franchise opportunities. His company uses a specially trained LLM on the back end to help customers find franchises, but Smereczniak says its clients often don’t know this.

So when one client asked to see opportunities for wellness-focused franchises, and the account manager recommended she open a Dave’s Hot Chicken, she was less than pleased. Smereczniak says the employee came clean and told the customer he had used AI to generate her matches.

“We took a closer look and realized the model had been weighting certain factors like profitability and brand growth too heavily, without enough context on the prospect’s personal values,” says Smereczniak. “We quickly updated the model’s training data and reweighted a few inputs to better reflect those lifestyle preferences.” When the Franzy team fired up the AI again, the model produced better matches, and the customer was happy with them.

“At a startup, things are moving a million miles a minute,” Smereczniak says. “I think, in general, it’s good for us all to remind ourselves when we are doing something client-facing or externally. It’s okay to slow down and double check—and triple check—the AI.”

The Hallucinated Source

Some companies have used AI mistakes to improve their work processes, which was the case at Michelle’s employer, a PR firm. (Michelle is a pseudonym as she’s not technically allowed to embarrass her employer in writing.)

Michelle tells Fast Company that a colleague used Claude, Anthropic’s AI assistant, to generate a ghostwritten report on behalf of a client. Unfortunately, Claude hallucinated and cited imaginary sources and quoted imaginary experts. “The quote in this piece was attributed to a made-up employee from one of the top three largest asset management firms in the world,” she says. But the report was published anyway.

Michelle’s company found out by way of an angry email from the asset management firm. “We were obviously all mortified,” Michelle says. “This had never happened before. We thought it was a process that would take place super easily and streamline the content creation process. But unfortunately, this snafu took place instead.”

Ultimately, the company saved face all around by simply owning up to the error, and it retained the account. The PR firm told the client and the asset management firm exactly how the error occurred and assured them it wouldn’t happen again thanks to new protocols.

Despite the flub, the firm didn’t ban the use of AI for content creation (it wants to be on the leading edge of tech), nor did it solely blame the employee, who kept their job. It did, however, install a series of serious checks in its workflow: all AI-generated content must now be reviewed by at least four employees. It’s a mistake that could have happened to anyone, Michelle says. “AI is a powerful accelerator, but without human oversight, it can push you right off a cliff.”

The AI-Powered Job Application

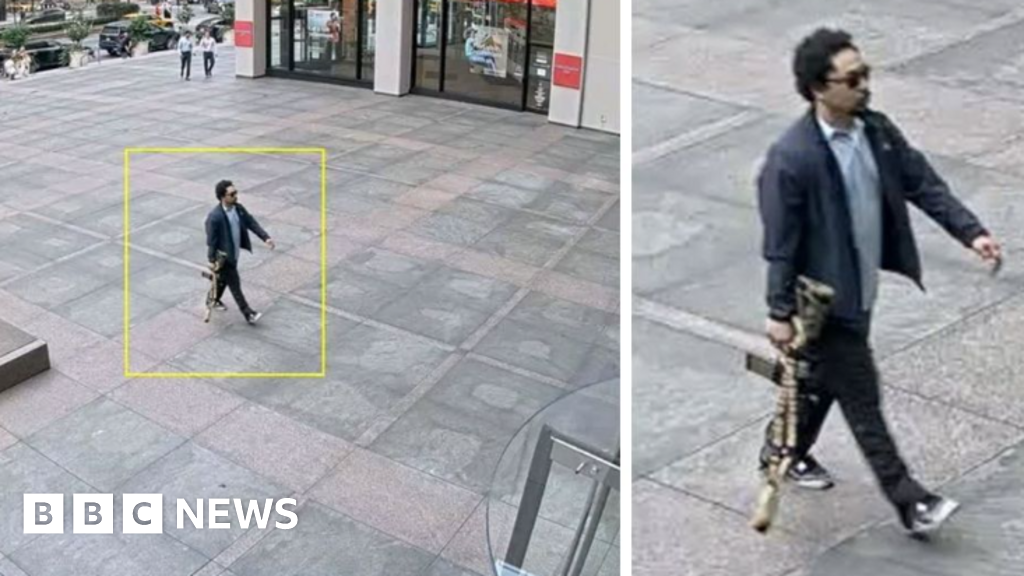

AI use isn’t just happening on the job; sometimes it’s happening during the job interview itself. Reality Defender, a company that makes deepfake detection software, asks its job candidates to complete take-home projects as part of the interview process. Ironically, it’s not uncommon for those take-home tests to be completed with AI assistance.

As far as Reality Defender is concerned, “everyone assumes, and rightfully so, that AI is being used in either the conception or full-on completion for a lot of tasks these days,” a rep for the company tells Fast Company. But it’s one thing to use AI to augment your work by polishing a résumé or punching up a cover letter, and another to have it simply do the work for you.

Reality Defender wants candidates to be transparent. “Be very upfront about your usage of AI,” they said. “In fact, we encourage that discretion and disclosure and see that as a positive, not a negative. If you are out there saying, ‘Hey, I did this with artificial intelligence, and it’s gotten me to here, but I am perfectly capable of doing this without artificial intelligence, albeit in a different way,’ you are not only an honest person, but it shows your level of proficiency with artificial intelligence.”

“Personally, I don’t think it’s necessarily bad to use [AI] to some extent, but at the very, very least, you want to check what’s being written and reviewed before we share it,” says Rychlik at Hello Operator. “More broadly, I ask everyone to pause regularly on this because if your first instinct is always ‘ask GPT,’ you risk worsening your critical thinking capabilities.”

Rychlik is tapping into a common sentiment we noticed. On the whole, companies are treating mistakes as learning opportunities: a chance to ask for transparency and to improve processes.

We’re in an age of AI experimentation, and smart companies understand mistakes are the cost of experimentation. In this experimental stage, organizations and employees using AI at work look tech-savvy rather than careless, and we’re just finding out where the boundaries are. For now, many workers seem to have adopted a policy of asking for forgiveness rather than permission.
